What a burnt marshmallow taught me about AI product-market fit…

…just kidding. This past week I was on the East Coast visiting family, but you can bet that AI still came up.

The best insight I brought home: one relative who works at a defense contractor told me Grammarly is pretty darn good at writing PL/SQL code. At my first job after college I wrote PL/SQL all day, every day, but haven’t touched it since. I wonder what alternate-reality Oracle DBA Andrew Baker is doing right now…

So today’s newsletter is a condensed one. We’ll return to the usual format next week.

Andrew’s picks 🔍

A handful of insightful links that earned a spot in my notes this week:

New data shows who is using ChatGPT and Claude, and for what

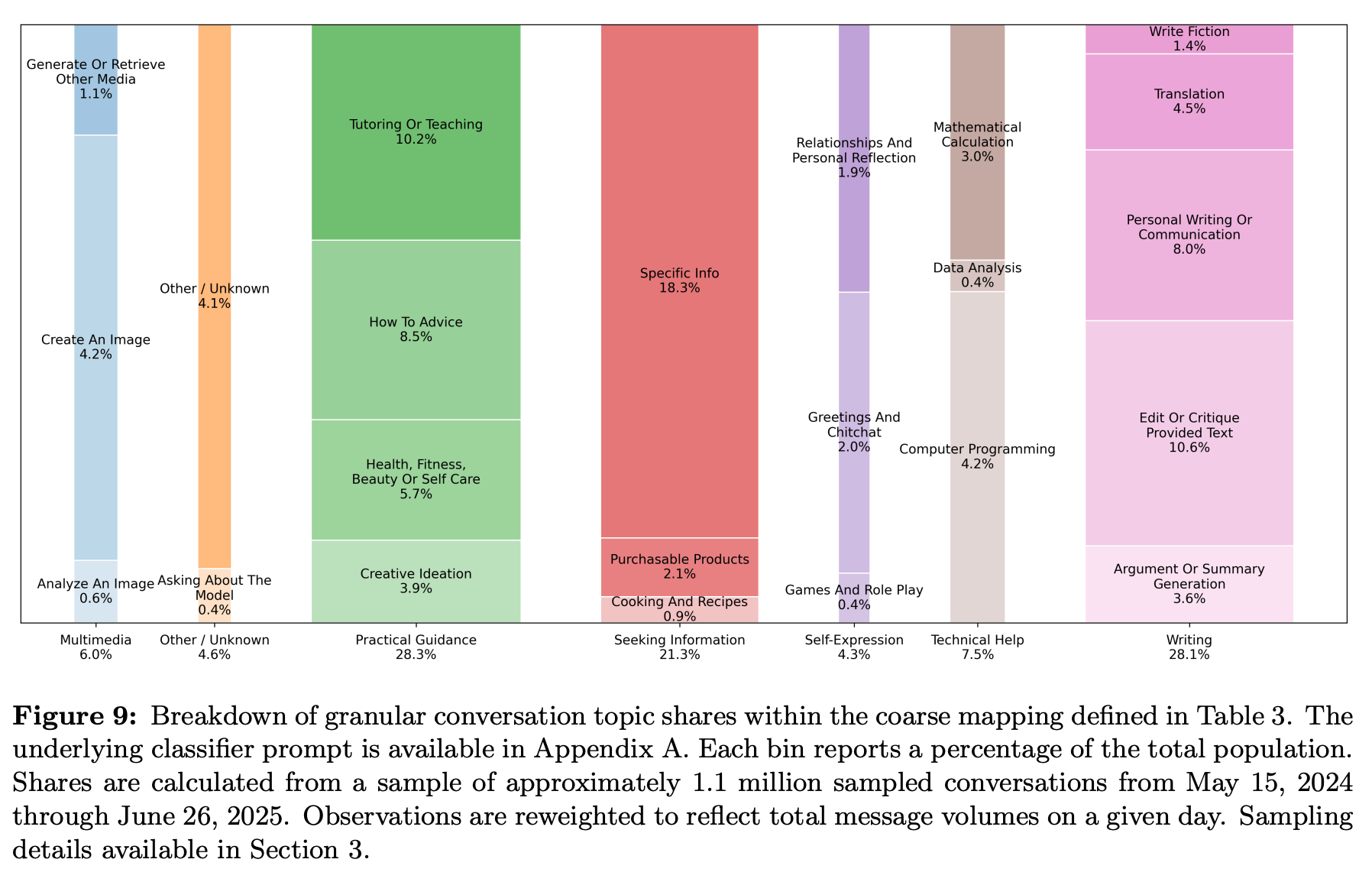

I’m still digesting the reports OpenAI and Anthropic released this month. OpenAI’s focuses primarily on how people are using ChatGPT. Anthropic’s is a good read if you’re more interested in the geography of AI adoption.

Neither quite tells the whole story, of course. The OpenAI report only covers ChatGPT usage, not usage via OpenAI’s APIs (or its new open-weight model, gpt-oss). Anthropic’s dataset does include first-party API data in addition to Claude usage, but it leaves out third-party API usage, which is a relatively larger portion of Anthropic’s business.

My early take: I wish the OpenAI report had been able to classify “homework help” as its own category. And the relatively small 2.1% “Purchasable products” category seems like it will be a challenge for OpenAI’s ambitions to use shopping to monetize free-tier ChatGPT users. Overall, however, I’d call this a more balanced spread of use cases than I would have guessed for consumer usage of ChatGPT in 2024-2025.

People still have concerns about how AI will impact their daily lives

It’s interesting to line up the reports above with the latest Pew data, which shows Americans have mixed feelings as AI marches forward. A majority are “open to letting AI assist them in day-to-day tasks,” but twice as many are concerned vs. excited when it comes to increased use of AI in daily life.

It’s tough to read deeply into broad surveys like this. What counts as “AI,” anyway? But I do consider it a helpful pulse check on general consumer sentiment, and how that might inform how AI is adopted by employees, incorporated into products, and sized up by regulators.

“An LLM agent runs tools in a loop to achieve a goal”

If you’re like me, you’ve been a bit frustrated this past year at how meaningless the term “agent” has become. So I’m happy to see developer influencer Simon Willison plant a flag on a plain-language definition that is grounded in how engineers are using LLMs to build products and features today.

To me, this definition passes the smell test. For example, an LLM-powered chatbot which uses Retrieval Augmented Generation (RAG) to reference a company’s internal documentation before generating its response would not be an agent: the “document lookup” step doesn’t run in a loop, and isn’t controlled by the LLM. But the same chatbot would be an agent if it referenced those corporate docs via a tool it controls, calling that tool zero, one, or many times while generating each response.
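To make that distinction concrete, here’s a minimal Python sketch of “tools in a loop.” Everything in it is made up for illustration: `DOCS`, `search_docs`, `fake_model`, and `run_agent` are hypothetical names, and `fake_model` is a hard-coded stand-in for a real LLM call, not any vendor’s API. What matters is the shape: the “model” decides on each pass whether to call the tool again or finish, so the number of lookups can be zero, one, or many.

```python
from typing import Callable

# Hypothetical stand-in for a company's internal documentation.
DOCS = {
    "vacation": "Employees accrue 1.5 vacation days per month.",
    "expenses": "Submit expense reports within 30 days.",
}


def search_docs(query: str) -> str:
    """Tool: look up a document by keyword."""
    return DOCS.get(query.lower(), "No matching document.")


# Registry of tools the model is allowed to call.
TOOLS: dict[str, Callable[[str], str]] = {"search_docs": search_docs}


def fake_model(goal: str, history: list[dict]) -> dict:
    """Stand-in for an LLM call (an assumption, not a real API).

    Given the goal and the tool results so far, it returns either a
    request to call a tool or a final answer.
    """
    looked_up = {step["arg"] for step in history}
    for topic in DOCS:
        if topic in goal.lower() and topic not in looked_up:
            return {"action": "call_tool", "tool": "search_docs", "arg": topic}
    answer = " ".join(step["result"] for step in history)
    return {"action": "finish", "answer": answer or "I can answer without the docs."}


def run_agent(goal: str, max_steps: int = 5) -> tuple[str, int]:
    """Run tools in a loop until the model says it is done.

    Returns the final answer and the number of tool calls made.
    """
    history: list[dict] = []
    for _ in range(max_steps):
        decision = fake_model(goal, history)
        if decision["action"] == "finish":
            return decision["answer"], len(history)
        # The model, not the surrounding code, chose this tool call.
        result = TOOLS[decision["tool"]](decision["arg"])
        history.append(
            {"tool": decision["tool"], "arg": decision["arg"], "result": result}
        )
    return "Stopped: step limit reached.", len(history)


if __name__ == "__main__":
    print(run_agent("What are the vacation and expenses policies?"))
    print(run_agent("Say hello"))
```

By contrast, the RAG chatbot in my example would call `search_docs` exactly once, before the model ever runs, with no loop and no decision by the model. The `max_steps` cap is my own addition: a loop driven by a model needs some guard against never finishing.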

Simon's got a knack for coining terms. I hope this one sticks.

What if the agent's goal is someone else's goal?

I’ve been pretty intrigued by the possibilities of what a helpful AI agent could do if it had access to your entire email mailbox. There’s no better single place an agent could go to learn about who you are and what you need help with today.

But even I have been reluctant to grant ChatGPT or Claude unfettered access to my Gmail. I was reminded why while reading about this zero-click attack discovered in ChatGPT Deep Research by a team of security researchers at Radware. The attack revolves around a malicious email sent to the target user which includes hidden instructions. Those instructions fool Deep Research into visiting a site the attacker controls, providing a way to exfiltrate private information contained in the victim’s other emails.

This vulnerability was responsibly disclosed to OpenAI, which confirmed this month it had been resolved. This won’t be the last time an attacker goes after a user’s ChatGPT-connected email inbox, though (especially if users can add third-party MCP servers someday). I’m curious whether some employers will disallow the email integration because its potential for vulnerabilities is too great.

San Francisco’s hottest club is… the office

Or your company’s Slack, at least.

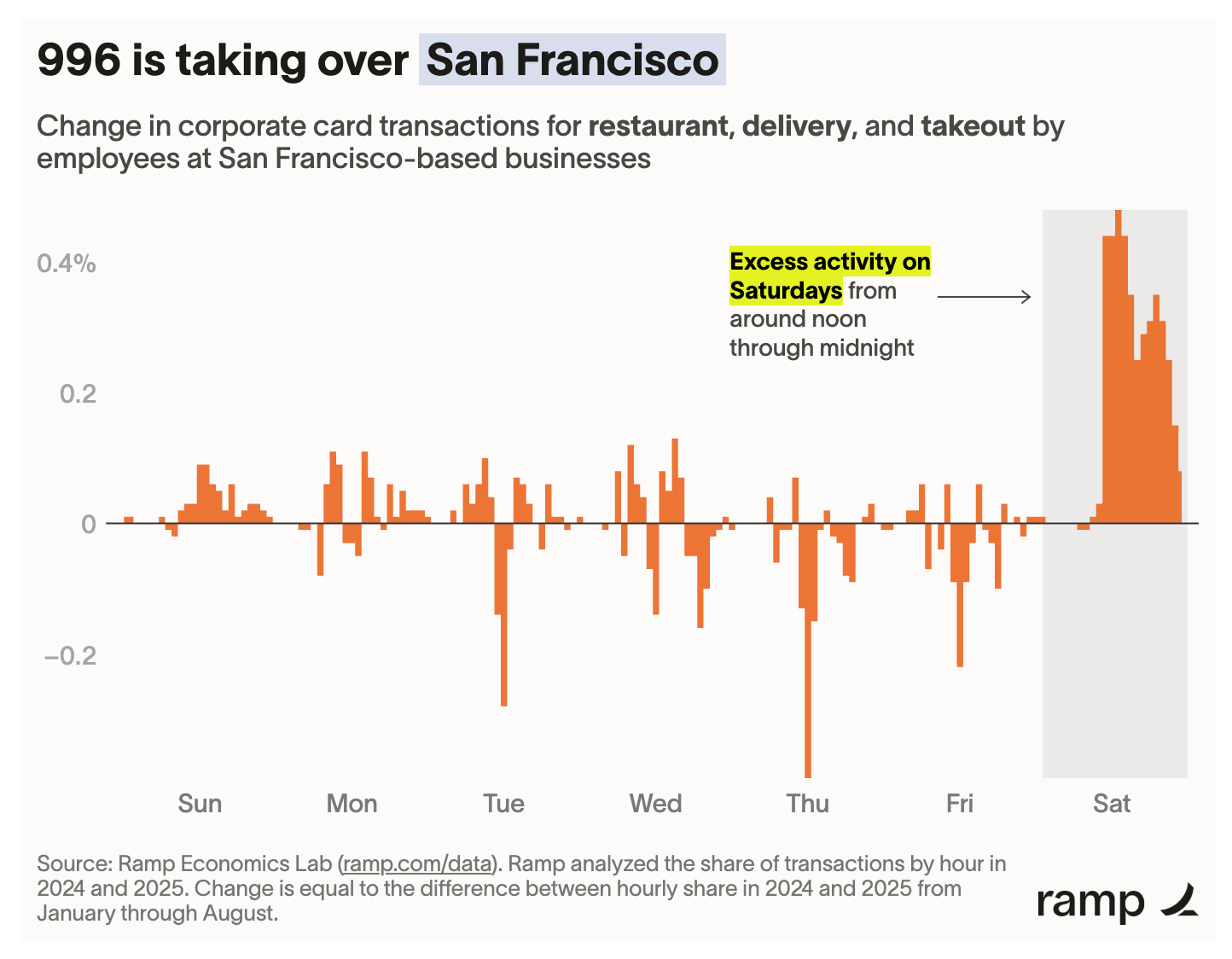

An economist at corporate card purveyor Ramp published some data this month showing that no, it’s not just your imagination: “996” really is catching on in San Francisco.

There are a few interesting data points here: the “Saturday bump” is new to 2025, it’s specific to San Francisco, and it’s not exclusive to software startups.

Next week 📆

That’s it for this week! Got thoughts? Just hit reply or send me a note at andrew@implausible.ai.

I’ll be back with the usual format next week. Subscribe here to get it delivered straight to your inbox.