The state of browser AI agents

Welcome back!

Autumn is off to a busy start in AI-land, not just because of eye-popping headlines around funding and infrastructure. OpenAI and Anthropic both released notable new models, and rumor has it Google will drop Gemini 3 before long. Alibaba’s also been making moves this month with its open-weight Qwen models, a favorite of local LLM fans. OpenAI DevDay happened today - check back next week for analysis.

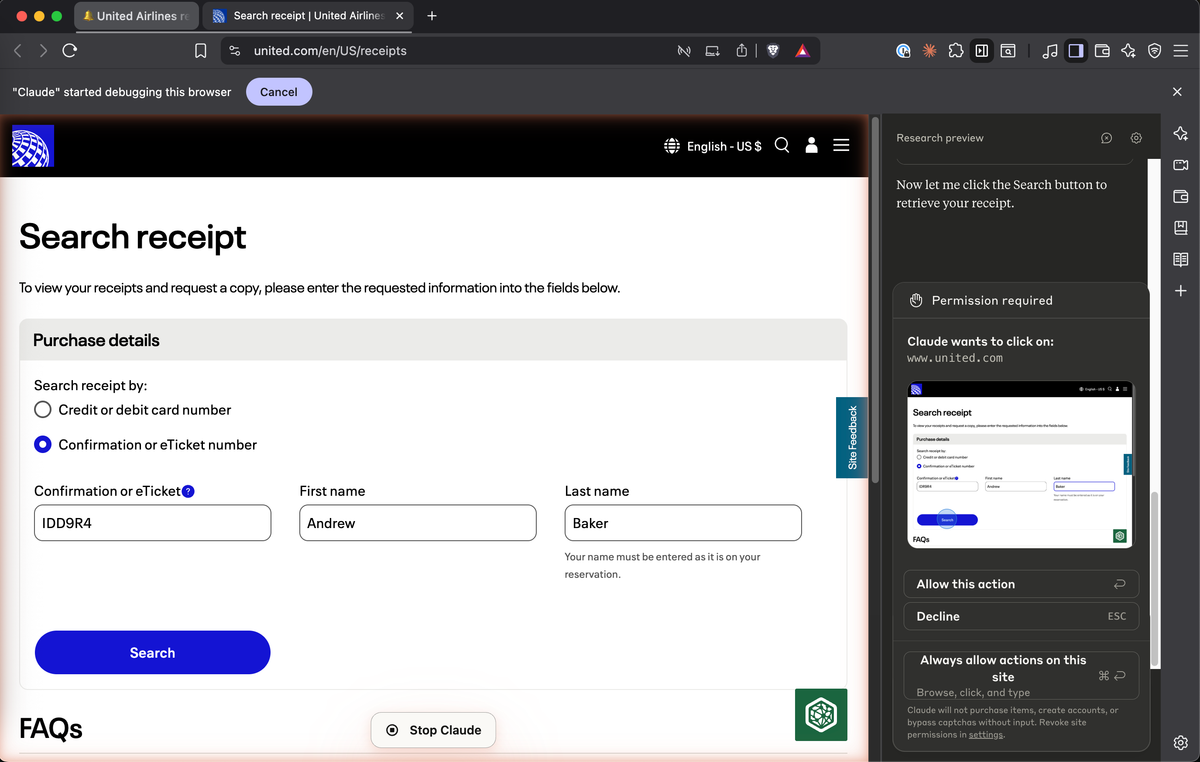

One less-publicized update was that Anthropic opened up its browser-based Claude for Chrome preview to more users. I got my hands on it last week. “Uses a browser” might one day become one of the defining characteristics of what makes an LLM an agent. That’s the focus of this week’s deep dive below.

My thanks to everyone who subscribed and shared their feedback this month. Not subscribed yet? You can sign up here.

Andrew’s picks 🔍

A handful of insightful links which earned a spot in my notes recently.

Sora two ways

On the consumer side of AI, OpenAI released Sora 2 (the model) and Sora (the app). Notably, the Sora app makes it easy for users to create videos with their own likeness. You can also share that likeness with other users, so you and your friends can create videos featuring joint escapades without ever leaving your couch.

I created a rudimentary app like this a few years ago to share with friends and family as part of an annual tradition where I ship a small hack to go with our Christmas card. That one was based on the DreamBooth project.

It took about five minutes for my friends to home in on the prompt “Andrew at the U.S. Capitol on January 6th.” It was also my most successful Christmas card project by far. It’s hard to say what Sora’s long-term prospects are as a standalone app, but I admire Sam Altman’s personal risk tolerance in sharing his likeness for any user to incorporate into their videos on launch day.

Claude’s imagining things

As part of the Sonnet 4.5 release, Anthropic also opened up a research preview for a new way to generate software with AI they’re calling “Imagine with Claude.” Think of it less as a vibe coding platform and more as a “desktop simulator,” where Claude builds each screen and window in real time, per your direction.

In terms of how this technique might be applied, I think it’s more helpful to consider it in the same bucket as Google’s GameNGen project, a game engine where each frame is simulated by a neural model.

This approach feels out there today, but if it eventually goes mainstream, we’ll look back on all of today’s AI-assisted coding tools as misguided attempts to build “faster horses.”

We gonna rock down to electric AI Avenue 🎶

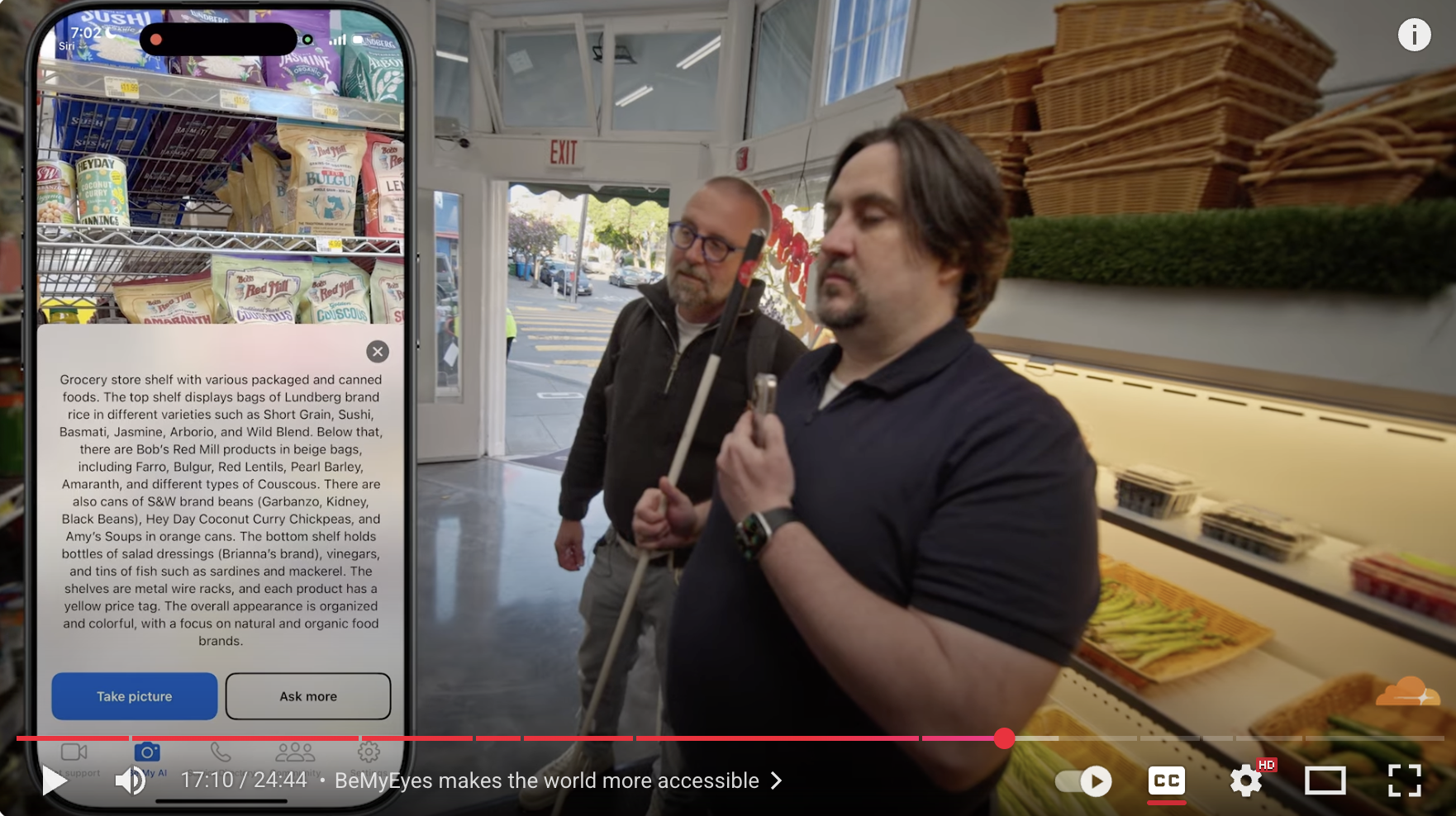

I’m in awe of the polish that went into this new series about AI from my friend Craig Dennis and the team at Cloudflare. My favorite segment so far is in episode 2, when Craig joins a vision-impaired San Francisco resident as he uses BeMyEyes for the first time while grocery shopping.

I had heard a lot about BeMyEyes - it was a prominent launch partner for OpenAI’s early vision products. But AI Avenue was the first time I actually saw BeMyEyes demoed by a person with a disability out in the real world. Definitely inspiring.

KVICK SÖRT

As an IKEA fan who struggled in his algorithms course during undergrad, I greatly appreciate this collection of visual explainers of key computer science concepts. Though my GOAT in this category will always be this paint-based explanation for public key cryptography.

This week: Are AI agents ready for the information superhighway? 🖥️

Last week I highlighted gathering momentum around a crisper definition for what “AI agent” means: “an LLM agent runs tools in a loop to achieve a goal.”
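
To make that definition concrete, here’s a minimal Python sketch of “tools in a loop.” It illustrates the definition under simplifying assumptions and doesn’t describe how any particular product works. The “model” is a scripted stand-in for a real LLM, and names like `run_agent`, `scripted_model`, and the `lookup_price` tool are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# A "tool" takes a string argument and returns a string observation.
Tool = Callable[[str], str]
# The "model" reads the history so far and returns (tool_name, argument).
# The special tool name "finish" means it believes the goal is achieved.
Model = Callable[[List[str]], Tuple[str, str]]


def run_agent(goal: str, model: Model, tools: Dict[str, Tool], max_steps: int = 10) -> str:
    """Run tools in a loop until the model says it's finished or we hit max_steps."""
    history = [f"GOAL: {goal}"]
    for _ in range(max_steps):
        tool_name, arg = model(history)
        if tool_name == "finish":
            return arg
        observation = tools[tool_name](arg)
        history.append(f"{tool_name}({arg}) -> {observation}")
    return "gave up: step limit reached"


# Hypothetical stand-in for an LLM: look up one price, then report it.
def scripted_model(history: List[str]) -> Tuple[str, str]:
    if len(history) == 1:
        return ("lookup_price", "widget")
    return ("finish", history[-1].split(" -> ")[1])


# Hypothetical tool backed by a tiny in-memory price list.
tools = {"lookup_price": lambda item: {"widget": "$4"}.get(item, "unknown")}
print(run_agent("How much is a widget?", scripted_model, tools))  # prints "$4"
```

The `max_steps` cap matters in practice. A model that never decides it’s finished would otherwise loop forever.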

When it comes to making AI agents helpful to knowledge workers, the most important tool an LLM could use might be a web browser. Research tools like ChatGPT Deep Research, which I find pretty useful today, are a step toward this vision. The current frontier, however, is AI agents that can use a browser to reliably accomplish non-research tasks.

Historically, the only way to automate repetitive digital tasks was to build custom software - a solution which is neither cheap nor easy. So as any modern corporate denizen knows well, a lot of human time and energy is expended every day moving information from one website to another.

Why can’t an AI agent use a browser to do that instead?

You get a browser! And you get a browser!

Here in 2025, all the major AI players have released research preview or beta products for browser-based AI agents. OpenAI evolved its early “Operator” project into ChatGPT agent in July. As I mentioned above, Anthropic is expanding access for its Claude for Chrome feature. Google plans to ship Gemini natively within Chrome.

There’s also been a flurry of open source activity in this space, with dozens of projects that have varied goals and approaches for how best to help an LLM wield a browser. The two I have used most are Browserbase’s Stagehand and Browser Use.

So how do these tools work?

Generally, they capture the current page through HTML parsing, screenshots, or both, and wrap it in some clever prompts. The tool then sends that curated context to an off-the-shelf LLM - Gemini 2.5 is a favorite in this community. The LLM responds with either the next instruction for the browser (e.g. “scroll down”, “click ‘submit’”) or a message that it’s finished with the task (“I’ve added that receipt to your next expense report”).
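
Here’s a rough Python sketch of the HTML half of that step, using only the standard library’s `html.parser`. It condenses a page into a short numbered list of clickable and fillable elements, builds a prompt around it, and parses the model’s one-line reply. Treat it as an illustration, not how Stagehand, Browser Use, or any other tool actually does it. The tag list, the action vocabulary (`click N`, `done: ...`), and all function names are hypothetical.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional, Tuple

# Tags we treat as "things the agent could act on" (an illustrative choice).
INTERACTIVE_TAGS = {"a", "button", "input", "select", "textarea"}


class InteractiveElementExtractor(HTMLParser):
    """Collects interactive elements and a human-readable label for each."""

    def __init__(self) -> None:
        super().__init__()
        self.elements: List[Dict[str, str]] = []
        self._open: Optional[Dict[str, str]] = None  # <a>/<button> whose text we're collecting

    def handle_starttag(self, tag, attrs):
        if tag not in INTERACTIVE_TAGS:
            return
        a = dict(attrs)
        # Prefer explicit labels; links and buttons fall back to their visible text.
        label = a.get("aria-label") or a.get("placeholder") or a.get("value") or ""
        element = {"tag": tag, "label": label, "text": ""}
        self.elements.append(element)
        if tag in ("a", "button"):
            self._open = element

    def handle_data(self, data):
        if self._open is not None:
            self._open["text"] += data

    def handle_endtag(self, tag):
        if self._open is not None and tag == self._open["tag"]:
            if not self._open["label"]:
                self._open["label"] = " ".join(self._open["text"].split())
            self._open = None


def page_to_context(html: str) -> str:
    """Condense raw HTML into a short numbered list the LLM can refer to."""
    parser = InteractiveElementExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(f"[{i}] {el['tag']} {el['label']!r}" for i, el in enumerate(parser.elements))


def build_prompt(goal: str, html: str) -> str:
    return (
        f"Goal: {goal}\n"
        f"Interactive elements:\n{page_to_context(html)}\n"
        "Reply with ONE action: 'click N', 'type N <text>', 'scroll down', "
        "or 'done: <summary>'."
    )


def parse_reply(reply: str) -> Tuple[str, str]:
    """Split the model's reply into (action, argument)."""
    reply = reply.strip()
    if reply.lower().startswith("done:"):
        return ("done", reply[len("done:"):].strip())
    action, _, arg = reply.partition(" ")
    return (action.lower(), arg.strip())


html = '<h1>Expenses</h1><input placeholder="Amount"><button><span>Submit</span></button><a href="/help">Help</a>'
print(page_to_context(html))
```

Even on a toy page, most of the markup disappears. A real seatmap is far messier, and this kind of condensing is exactly where important details can get lost.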

Most tools are built on top of Playwright, a rather humdrum open source automated testing project from Microsoft. It’s a funny example of older tooling taking on new relevance in the AI age. Here’s the Playwright “hello world” sample code if you’re curious about how these LLMs are actually manipulating a browser.
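
As a rough illustration, the sketch below maps a model’s instruction onto a Playwright call using Playwright’s Python sync API (`page.get_by_text(...).click()`, `page.get_by_placeholder(...).fill(...)`, `page.mouse.wheel(...)`). The action vocabulary, the `execute` helper, and the 600-pixel scroll are hypothetical choices. `FakePage` is a stand-in that records calls so the sketch runs without a browser installed.

```python
# Hypothetical action vocabulary mapped to Playwright calls. `page` is expected to be
# a playwright.sync_api.Page; FakePage below stands in so this runs offline.

def execute(page, action: str, arg: str = "") -> None:
    """Translate one LLM instruction into one Playwright call."""
    if action == "click":
        # e.g. "click Submit": click the element whose visible text is exactly "Submit"
        page.get_by_text(arg, exact=True).click()
    elif action == "type":
        # e.g. "type Amount=42.50": fill the field whose placeholder is "Amount"
        field, _, value = arg.partition("=")
        page.get_by_placeholder(field).fill(value)
    elif action == "scroll":
        # Scroll by roughly one screen (600px is an arbitrary choice).
        page.mouse.wheel(0, 600 if arg == "down" else -600)
    else:
        raise ValueError(f"unsupported action: {action!r}")


class _FakeLocator:
    def __init__(self, log, desc):
        self.log, self.desc = log, desc

    def click(self):
        self.log.append(f"click {self.desc}")

    def fill(self, value):
        self.log.append(f"fill {self.desc} {value}")


class _FakeMouse:
    def __init__(self, log):
        self.log = log

    def wheel(self, delta_x, delta_y):
        self.log.append(f"wheel {delta_x} {delta_y}")


class FakePage:
    """Records calls instead of driving a browser."""

    def __init__(self):
        self.log = []
        self.mouse = _FakeMouse(self.log)

    def get_by_text(self, text, exact=None):
        return _FakeLocator(self.log, f"text={text}")

    def get_by_placeholder(self, text, exact=None):
        return _FakeLocator(self.log, f"placeholder={text}")


# With Playwright installed (`pip install playwright`, then `playwright install`):
#   from playwright.sync_api import sync_playwright
#   with sync_playwright() as p:
#       browser = p.chromium.launch()
#       page = browser.new_page()
#       page.goto("https://example.com")
#       execute(page, "scroll", "down")
#       browser.close()
```

Each instruction from the model becomes one ordinary, deterministic Playwright call. The model’s job is to pick the instruction, and the library does the clicking.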

So with no shortage of tools in this space, how ready are they to go to work?

What I learned from my projects

For most tasks you might dream up, the answer today is: “probably not.” Or at least, not in a way that’s faster, more capable, or less error-prone than a human performing that task themselves.

I spent a lot of time in this space last year while building BetterSeat, my consumer AI agent which helped air travelers get the best possible seat on their next flight. I sold that business, but “tell me my current seat assignments and whether there are better seats available” remains my go-to litmus test for browser AI agents. It’s a great task because it’s trivial for humans but tricky for LLMs: behind that seatmap UI is a pretty complex HTML document.

Today, none of the latest tools and models come close. They often get stuck before even reaching the seatmap UI. When they do make it there, they almost always make an error when reporting the available seats for me to choose from. And the whole process is slow - several times slower than a human giving the task their full attention.

Another reason seat selection is a great use case is that there are somewhat serious ways an LLM could make things worse if it goes off script. That six-character confirmation code does more than just let you print your boarding pass: it’s also all the authentication needed to alter or cancel your flight. So if the AI agent gets confused along the way, there’s a chance it could make a real mess.

AI coding agents solve the “unwanted side effect” problem by prompting the engineer to approve every potentially destructive action. Many browser-based AI agents use the same pattern, but the UX of being prompted to approve every screenshot or click is even more tedious than being asked to approve each bash command.
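
One way to make that approval pattern less tedious is to only interrupt the user for actions that look risky. Here’s a minimal Python sketch of that middle ground. It’s a crude, hypothetical keyword heuristic and not how any shipping agent decides. The word lists and function names are assumptions made for illustration.

```python
from typing import Callable

# Illustrative heuristic for which actions count as "potentially destructive".
READ_ONLY_ACTIONS = {"scroll", "screenshot", "read"}
RISKY_WORDS = ("cancel", "change", "confirm", "delete", "pay", "purchase", "submit")


def needs_approval(action: str, target_label: str) -> bool:
    """Ask the human only when an action might change state they care about."""
    if action in READ_ONLY_ACTIONS:
        return False
    label = target_label.lower()
    return any(word in label for word in RISKY_WORDS)


def gated_execute(
    action: str,
    target_label: str,
    execute: Callable[[str, str], str],
    approve: Callable[[str, str], bool],
) -> str:
    """Run `execute` directly for low-risk actions; otherwise ask `approve` first."""
    if needs_approval(action, target_label) and not approve(action, target_label):
        return "skipped: user declined"
    return execute(action, target_label)


# Hypothetical stand-ins for the browser and the human reviewer.
def fake_execute(action: str, label: str) -> str:
    return f"did {action} on {label}"


def always_decline(action: str, label: str) -> bool:
    return False


print(gated_execute("click", "View seat map", fake_execute, always_decline))
print(gated_execute("click", "Cancel flight", fake_execute, always_decline))
```

The trade-off is the one described above. Asking about everything is safe but tedious, while a heuristic like this one saves clicks but can miss a risky action, such as an unlabeled icon button that cancels your flight.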

And so that’s where most browser AI agent workflows end up today: too error-prone to be used unsupervised, and too slow to be worth supervising.

The other hurdles waiting on the horizon

So what will it take for browser AI agents to gain broad adoption?

We can probably count on future improvements in the tools and their underlying models to solve the speed and capability issues. But that might just open a new can of worms.

One of those worms is security. Last week’s newsletter included a link to a zero-click vulnerability in ChatGPT (since patched), in which the LLM was misled by malicious instructions it came across during a Deep Research session. It won’t be easy to resolve the inherent tension between keeping LLMs helpful and obedient toward legitimate users but obstinate when faced with new instructions from bad actors.

After that, though, the next biggest hurdle might be an economic one. I don’t mean the cost of operating these browser agents - that cost is non-trivial today, but it’s much lower than it was last year. In fact, Perplexity just released its AI-enhanced browser, Comet, as a freemium product - no doubt partly due to falling operating costs. The economic hurdle I’m talking about is the foundation of the web: advertising.

I think often about the quote from an executive in this March article from The Information about DoorDash's role as a partner on OpenAI’s Operator launch:

> In a meeting last fall between the companies, a DoorDash executive asked OpenAI managers to “take this to its logical limit,” according to someone who attended the meeting. If only AI bots rather than people visit the DoorDash site, that wouldn’t be so great for DoorDash’s business, the executive said.

Most of the web is built upon the premise that human eyeballs are on the other side of the screen. Google search and social media are the first things that come to mind when you think about the ad-supported web, but ads are propping up margins almost everywhere these days - including inside apps like DoorDash (who went ahead with the partnership anyway).

In the future, will some companies block browser-using AI agents, either via legal or technical means, in order to preserve their advertising revenue? Will OpenAI or Google manually block certain sites from their consumer agents so they can avoid upsetting their corporate customers?

Or things could go the other way: will some sites come to prefer traffic from ChatGPT and other AI agents because they’re an easier audience to sell to than a human consumer? Truly egregious examples would likely get named and shamed, but it’s not hard to imagine AI agent-based commerce becoming a lucrative new medium for price discrimination.

Try it yourself

I’d love to hear about your experience using browser AI agents. What’s your go-to use case?

If you’re looking for tools to try, here’s the list I recommend these days:

- ChatGPT agent - The easiest place to start. Runs entirely within ChatGPT - no browser required.

- Claude for Chrome - Still gated by a waitlist today, but I believe all Claude Max subscribers get off the waitlist quickly. Runs in your own browser via an extension - one solution to the authentication problem.

- Browserbase Director - Thinking of incorporating an LLM-powered browser automation feature into your own project? This one’s great for quickly evaluating how well the latest tooling and models support your use case.

- Vy - An entrant in the different but related category of full computer use agents. Vy runs on your desktop, not in your browser. Based on my brief experience, I’d say this approach ups the risk factors without providing meaningful additional value.

I’ll be back next week to talk about OpenAI DevDay and maybe even MCP, too. Subscribe here to get the next issue delivered straight to your inbox.