Issue no. 2: “Wide” coding

Welcome to issue no. 2 of the Implausible AI newsletter!

Each issue starts off with an update on my work plus a handful of recent links I found insightful. Then I dive deep into a specific AI topic. Last week we checked in on the “vibe coders”. This week, we’re looking at how professional engineers are putting AI coding tools to work in 2025.

My thanks to all of you who subscribed last week and shared your feedback. Not subscribed yet? You can sign up here.

Let’s dive in!

My work 👨‍💻

Last week Elle Grossenbacher and I talked do’s and don’ts at Developer Marketing Summit.

This Friday I’ll be speaking to François Dufour’s Product-Led Leaders group about how AI changes the ways engineers find, adopt, and implement developer products. I’ve got 10 invites to share with Implausible subscribers. Interested? Hit “reply” or email me at andrew@implausible.ai.

Andrew’s picks 🔍

A handful of insightful links which earned a spot in my notes this week:

Why “agents” aren’t happening yet

This piece from Steve Newman and the folks at the Golden Gate Institute for AI is a great roundup of how even the most capable models still struggle when encountering the messiness of the “real world.”

A great read if you’re asking yourself “wait, aren’t the agents supposed to be here by now?”

Getting LLMs more comfortable saying “I don’t know”

In last week’s issue I mentioned how LLMs’ inherent misunderstanding of their own limitations is one reason why AI coding agents are prone to hacky workarounds. That’s annoying when working with code, but hallucinations are a bigger problem when consumers use AI models.

OpenAI suggests in this paper there might be a way to make progress on hallucinations yet.

ChatGPT now supports MCP servers

Model Context Protocol (MCP) is an open-source standard for connecting AI applications to external systems. An MCP server is that connection "glue code," provided to you by a third-party developer.

The dream? MCP servers are the open source and vendor-provided tools that turn an LLM into an AI agent. The fear? That they’ll be the defining infosec attack vector of the AI era.

OpenAI added MCP support to ChatGPT last week as an opt-in capability, presented for now as a developer feature.

I am excited about the possibilities here, but unfortunately it seems inevitable that MCP support in the most popular AI chatbot will attract scammers in the near term. Install your MCP servers judiciously!

A hundred ways to answer “What time is it?”

Five years ago I launched Gator, the Slack app I built to send smart scheduled messages before Slack added its own (more basic!) built-in scheduler. 300,000 Gator messages later, it’s safe to say I’ve thought a lot about time zones these past few years.

Ever since stumbling across this developer’s passion project, I can’t stop thinking about swapping Gator to use one of these other calendars for April Fools' 2026.

“Wide” coding 💻

In 2025 AI coding tools have become significantly more capable of working independently. That sheds new light on an important question: if an AI coding agent can author reasonable-quality code without close supervision, what’s the best way to put it to work?

Last week we explored the first half of the answer to that question, checking in on the vibe coders and their results building valuable software without firsthand engineering skills.

Today, we’ll look at how professional software engineers are deploying AI coding tools to scale their own work.

2024: AI-assisted coding within the IDE

Last year most AI-assisted coding was still happening within a developer’s Integrated Development Environment (IDE).

Developers who have been using, say, Visual Studio Code for years could flip a switch and turn on GitHub Copilot, Microsoft’s AI coding assistant. The developer gets to keep all their familiar tooling and customizations, but the autocomplete feature becomes more intelligent and there’s a new chat UI. An AI early adopter might use something like Cursor or Windsurf instead. Those tools offer a more AI-native UX, but are still full-fledged IDEs (which they achieve by leveraging VS Code’s underlying open source project).

It made sense for AI coding tools to start this way for both product and distribution reasons. On the product front, in 2024 LLMs were getting better at writing code but still needed supervision for most tasks. Reviewing and correcting the LLM’s output worked best inside an IDE. On the distribution front, an IDE is the most important tool in a professional engineer’s workflow and it’s also easily extensible. Augment Code, for example, packages their entire product inside an extension, not a standalone application like Cursor or Windsurf.

Meanwhile, vibe coding startups and some open source projects were experimenting with other UX approaches for pointing LLMs at coding tasks. But the defining aspects of a professional engineer’s process still felt pretty familiar.

Can you turn a 1x engineer into a 10x engineer?

Things changed when Anthropic released Claude Code in early 2025. Claude Code ditched the IDE and runs as a command line tool instead.

Claude Code first got attention from developers because its clever design seemed to get better mileage out of the same models compared to when they were used in other coding tools. When Anthropic released Claude 4 Sonnet and Opus in May, developers noticed that with these new models Claude Code’s work didn’t need revisions as often. In this workflow, developers usually still review code carefully before it is merged and deployed. But for the first time, it seemed possible that a lightly-supervised coding agent could produce close-to-production-quality results. Packaging that tool as a CLI meant there was no limit to how many a developer could run at the same time.

Developers live to automate, and so early adopters raced to figure out the best way to orchestrate and parallelize CLI-based coding agents.
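For the curious, here is a minimal sketch of what "orchestrate and parallelize" can look like in practice. It is not how any particular tool works: `run_agent`, `run_all`, and `demo_tasks` are hypothetical names, and the commands are harmless stand-ins that only print a message. You would swap in your own agent CLI's non-interactive invocation.

```python
import asyncio
import sys


async def run_agent(name, command, limit):
    """Run one agent command and return (name, exit_code, output)."""
    async with limit:  # cap how many agents run at the same time
        proc = await asyncio.create_subprocess_exec(
            *command,
            stdout=asyncio.subprocess.PIPE,
            stderr=asyncio.subprocess.STDOUT,
        )
        output, _ = await proc.communicate()
        return name, proc.returncode, output.decode().strip()


async def run_all(tasks, max_parallel=3):
    """Run every task concurrently, at most max_parallel at once."""
    limit = asyncio.Semaphore(max_parallel)
    jobs = [run_agent(name, cmd, limit) for name, cmd in tasks.items()]
    # gather() returns results in the same order the jobs were listed
    return await asyncio.gather(*jobs)


def demo_tasks():
    # Stand-in commands (an assumption for illustration): each one just
    # prints its task name instead of calling a real coding agent.
    return {
        name: [sys.executable, "-c", f"print('done: {name}')"]
        for name in ("upgrade-deps", "fix-lint", "add-tests")
    }


if __name__ == "__main__":
    for name, code, output in asyncio.run(run_all(demo_tasks())):
        print(f"{name}: exit {code}: {output}")
```

The semaphore is the interesting design choice: a CLI lets you launch as many agents as you like, but your rate limits and your review capacity are finite, so a cap on concurrency is usually worth having.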

This was around the time I experienced my personal “we’re never going back” moment, when I realized it was more productive for me to spell out detailed requirements for Claude Code and let it crank for 5+ minutes at a time vs. authoring the changes myself in smaller chunks. I often link clients to this post from Thomas Ptacek, which describes why tools like Claude Code are worth a try even for engineers who are AI skeptics.

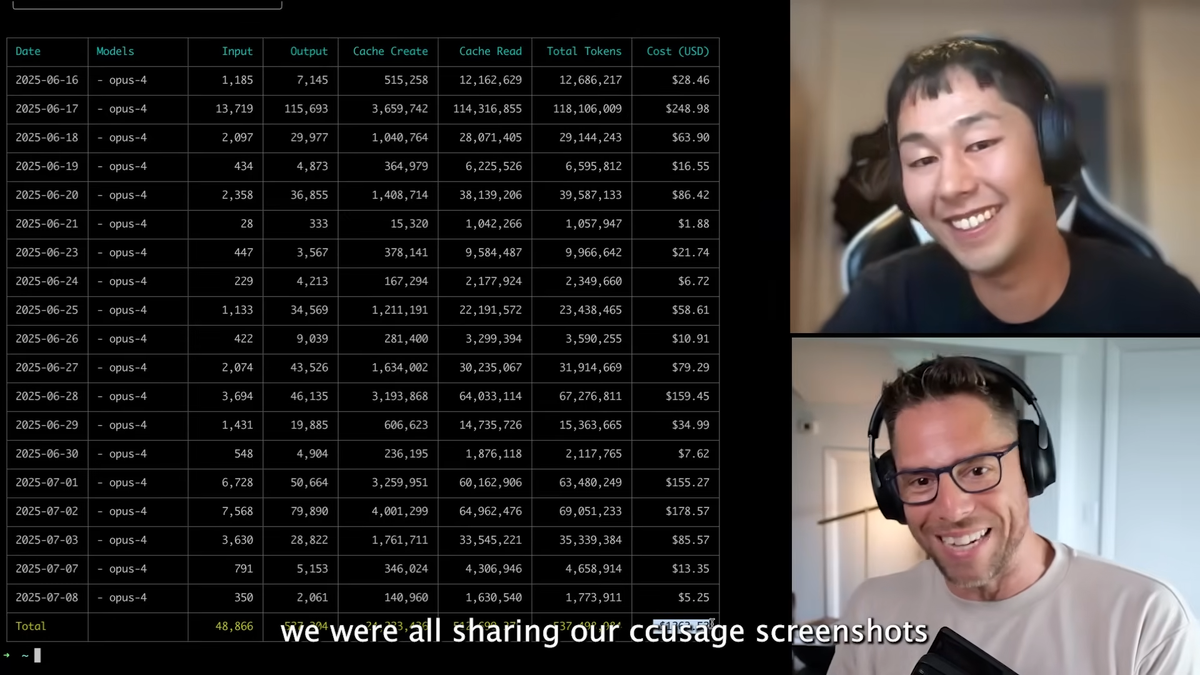

In a stroke of packaging brilliance / folly, Anthropic included Claude Code in its $100 per month Claude Max plan, allowing developers to use Claude Code at a significant discount to the Anthropic API’s usage-based pricing. Developers were quick to discover techniques for maximizing their usage - and bragging about it. My friend Greg did a great interview with Ryotaro Kimura, the creator of ccusage, a trendy open source tool for analyzing your Claude Code usage.

Anthropic tweaked its rate limits in July, ending the gravy train for the token-bragging crowd. But this spring and summer proved the potential of lightly-supervised AI coding agents: OpenAI, Cursor, and others have all since launched their own similar CLI-based tools.

So how do you herd the cats best?

This space is moving fast, with new tools and best practices every week. But there are a few early pearls of wisdom for forward-thinking R&D teams:

Unsurprisingly, lightly-supervised coding agents do best on straightforward tasks, like addressing breaking changes stemming from an upgrade in one of your project’s dependencies. Next, the better your test coverage is, the quicker you’ll be able to verify that an AI-generated change to your code won’t come with any unwanted side effects or regressions. When you do point agents at more complex tasks, you’ll get better results with more detailed requirements (and even then, maybe plan to receive a “first draft” vs. a finished product).
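One way to lean on that test coverage is a simple gate: only accept an agent's change if your test suite still passes. The sketch below is an assumption-heavy illustration, not a recommended pipeline. `change_passes_tests` is a hypothetical helper, and the two demo commands are stand-ins for a real test runner.

```python
import subprocess
import sys


def change_passes_tests(test_command, cwd=None, timeout=600):
    """Return True only if the test command exits with status 0."""
    try:
        result = subprocess.run(
            test_command,
            cwd=cwd,
            timeout=timeout,
            capture_output=True,
            text=True,
        )
    except subprocess.TimeoutExpired:
        return False  # treat a hung test run as a failure
    return result.returncode == 0


if __name__ == "__main__":
    # Stand-ins for a real test runner: one "suite" passes, one fails.
    passing = [sys.executable, "-c", "raise SystemExit(0)"]
    failing = [sys.executable, "-c", "raise SystemExit(1)"]
    print(change_passes_tests(passing))
    print(change_passes_tests(failing))
```

A gate like this only tells you as much as your tests do, which is why coverage matters so much here.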

And just because AI coding agents can increasingly work independently, it doesn’t mean that’s the right technique for every task in an engineer’s inbox. I loved this post from an engineer at Sanity with practical recommendations for using Claude Code while still working primarily on one task at a time.

Finally, keep an eye on tooling in this space. Between the large labs’ own tools, startups aimed squarely at this problem, and the open source community, there are bound to be plenty of options.

Here are just a handful which I’ve personally seen demos of recently:

- Conductor - “Run a bunch of Claude Codes in parallel”

- cmux - “Orchestrate AI coding agents in parallel”

- Omnara - Control Claude Code on your desktop via your mobile device (works not via the Claude Code SDK, but by scraping the terminal output 🤯)

- Vibe Kanban - “Kanban board to manage your AI coding agents”

Next week 📆

That’s it for this week’s issue. Got thoughts? I’d love to hear them. Just hit reply or send me a note at andrew@implausible.ai.

Subscribe here to get next week’s issue delivered straight to your inbox.